interfaces

19 Oct, 2023

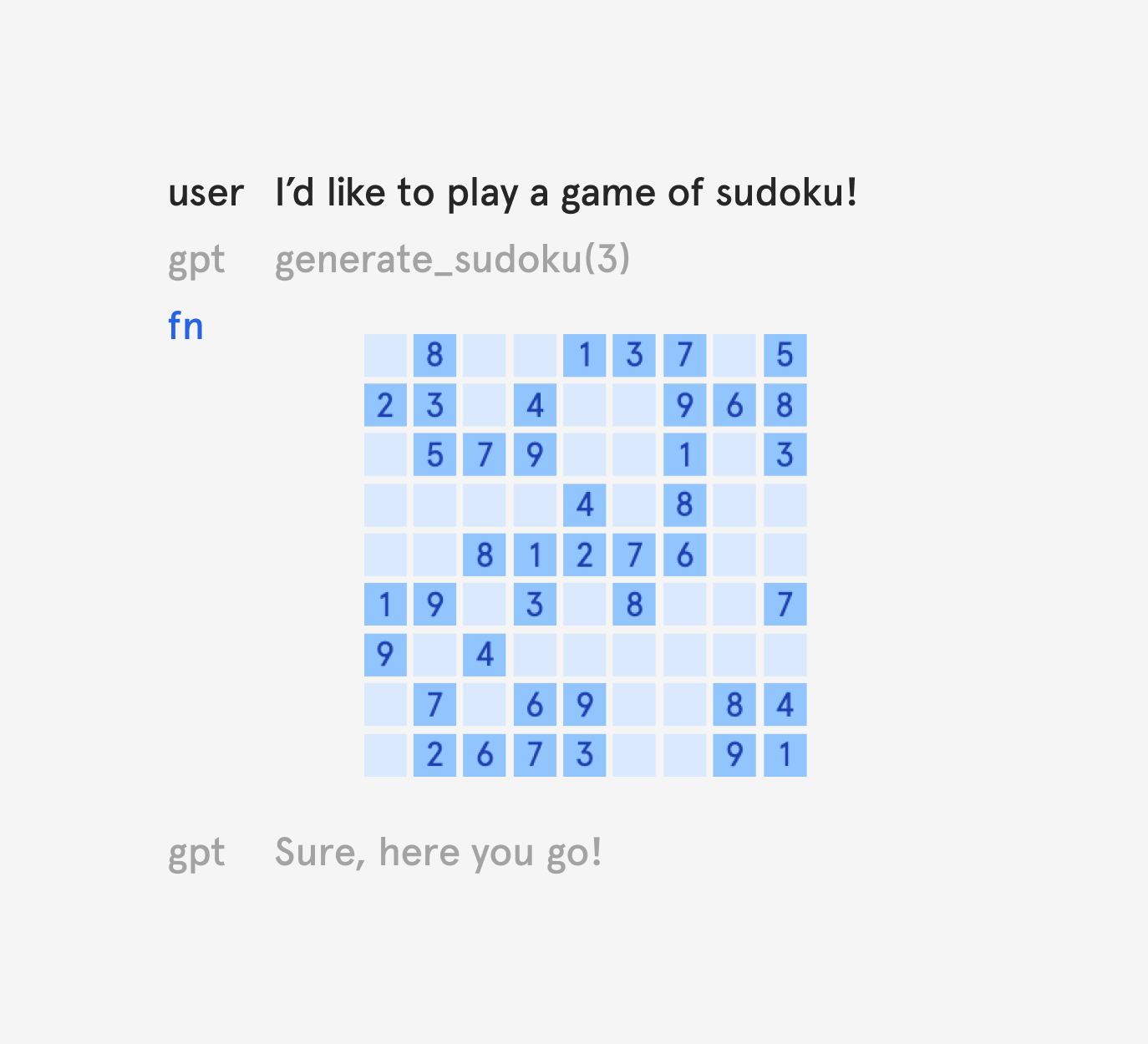

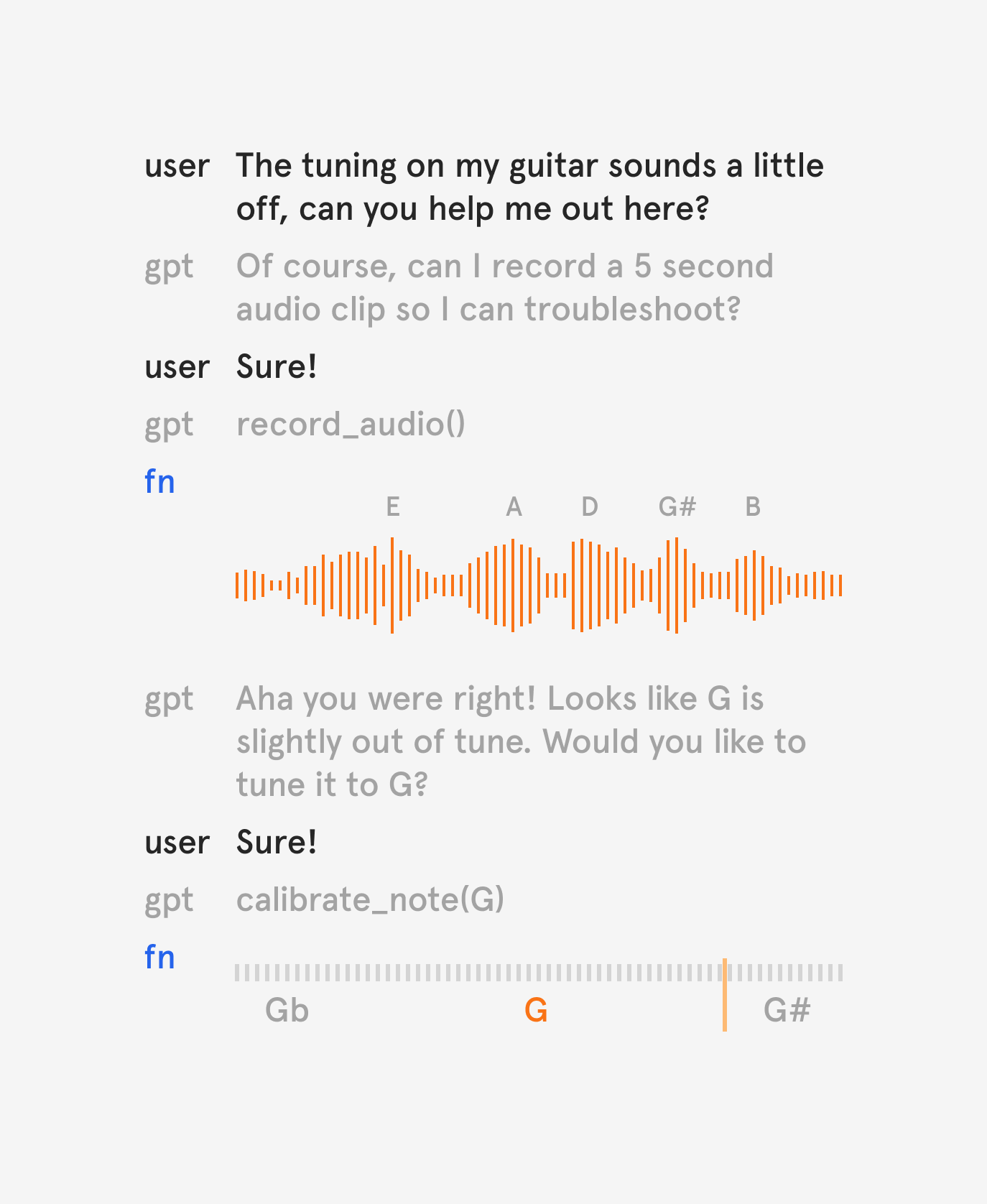

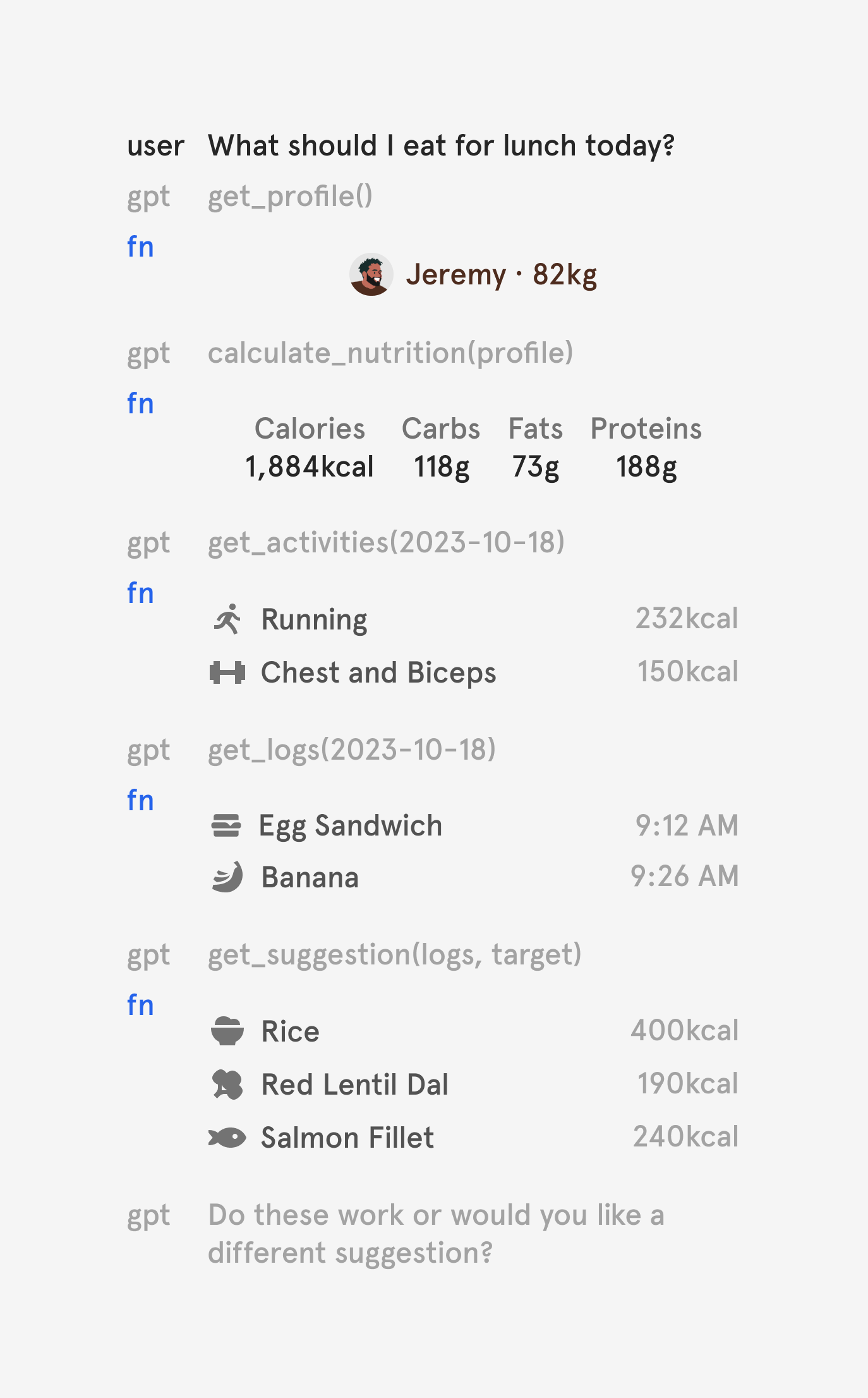

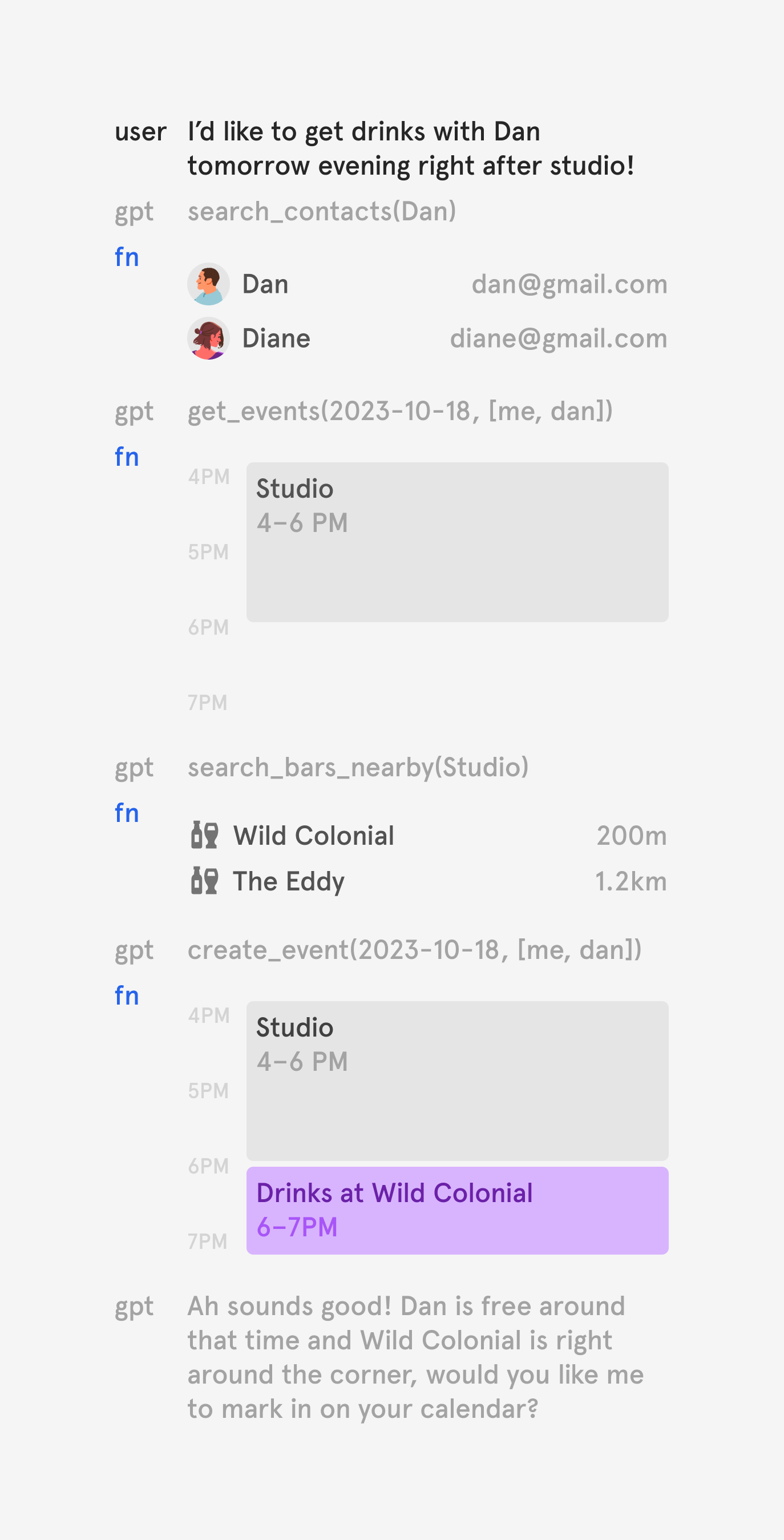

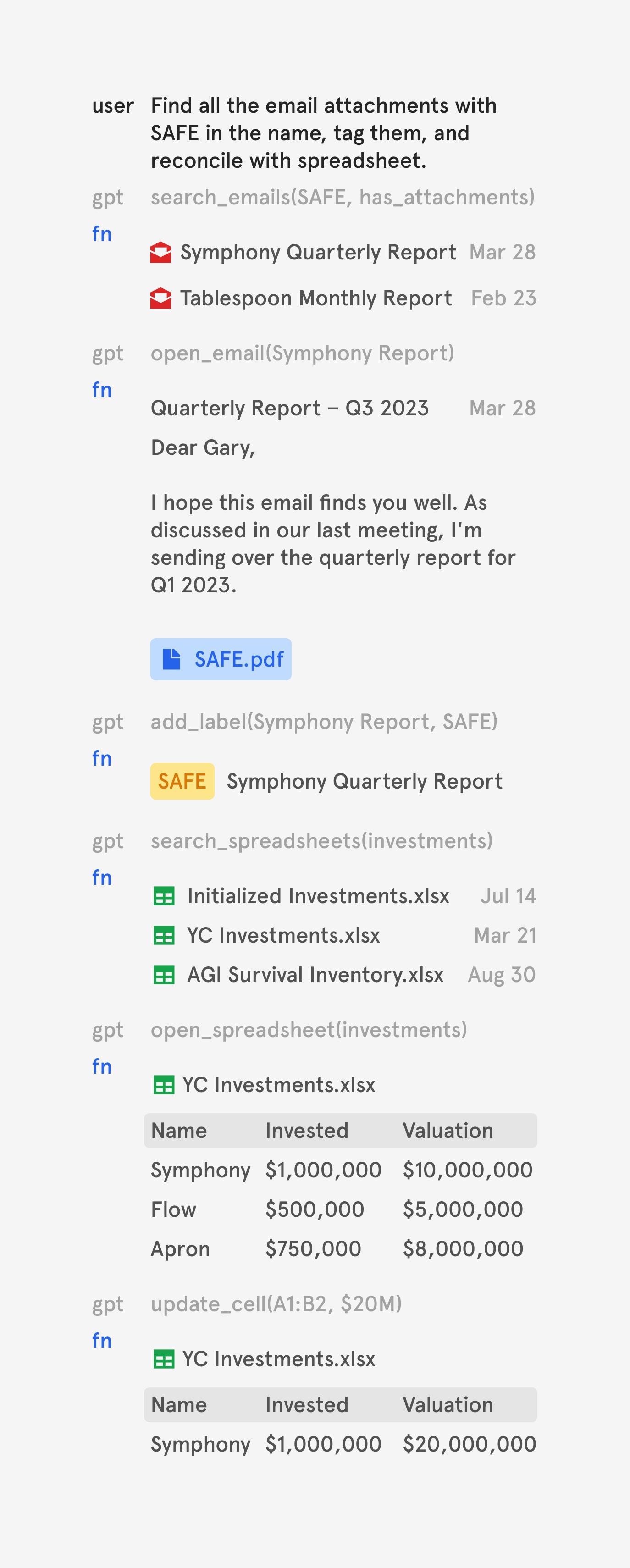

Another interesting consequence of models like GPT-3.5+ being able to call functions is that this ability can be used to render visual interfaces within a conversation.

Most developers choose to hide the result of function calls from users. It makes sense because the result is mostly for models to consume and may not necessarily be human-readable.

However, a more powerful pattern emerges when those results are intentionally made visible to users. They can be used not only to render interfaces but also to run programs as part of a conversation.

Here's what that looks like.

Rendering interfaces within a conversation opens up novel ways for humans to interact with language models beyond text.
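To make the pattern concrete, here is a minimal sketch in Python. It is not Symphony's implementation: `get_weather`, `render_weather`, `handle_function_call`, and the `for_model` / `for_user` keys are all hypothetical names chosen for illustration. The idea is that a single function call produces two views of the same result, a JSON string for the model to consume and an interface for the user to see.

```python
import json
from html import escape


def get_weather(city: str) -> dict:
    """Hypothetical tool. A real one would query a weather service."""
    return {"city": city, "temperature_c": 21, "condition": "Sunny"}


def render_weather(result: dict) -> str:
    """Hypothetical renderer: turns the structured result into an HTML card."""
    return (
        '<div class="weather-card">'
        f"<h3>{escape(result['city'])}</h3>"
        f"<p>{result['temperature_c']} °C, {escape(result['condition'])}</p>"
        "</div>"
    )


# Registries mapping a function name to its implementation and its interface.
TOOLS = {"get_weather": get_weather}
RENDERERS = {"get_weather": render_weather}


def handle_function_call(name: str, arguments_json: str) -> dict:
    """Run a model-requested function and return both views of the result."""
    result = TOOLS[name](**json.loads(arguments_json))
    renderer = RENDERERS.get(name)
    return {
        "for_model": json.dumps(result),  # sent back to the model
        "for_user": renderer(result) if renderer else None,  # shown in the chat
    }


message = handle_function_call("get_weather", '{"city": "Lisbon"}')
print(message["for_user"])
```

Keeping the renderer separate from the function itself means a function without a renderer still works as before. Its result is simply hidden from the user, as most applications do today.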

Interfaces also make it easier for humans to interpret function calls made in sequence. They give us a better way to pinpoint and debug the exact step where the model's reasoning went wrong.
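As a rough illustration of why visible calls help with debugging, the sketch below renders a sequence of function calls as numbered steps and reports the first one that failed. The `CallStep` record, `render_trace`, and `first_failure` are hypothetical names, and the trace data is made up for the example. It only flags calls that returned an error; a person reading the rendered steps can also spot calls that succeeded but were made with the wrong arguments.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CallStep:
    """One function call made by the model (hypothetical record shape)."""
    name: str
    arguments: dict
    result: Optional[str] = None
    error: Optional[str] = None


def render_trace(steps: List[CallStep]) -> str:
    """Render each call as a numbered, human-readable line."""
    lines = []
    for i, step in enumerate(steps, start=1):
        args = ", ".join(f"{k}={v!r}" for k, v in step.arguments.items())
        status = f"error: {step.error}" if step.error else f"ok: {step.result}"
        lines.append(f"{i}. {step.name}({args}) -> {status}")
    return "\n".join(lines)


def first_failure(steps: List[CallStep]) -> Optional[int]:
    """Return the 1-based index of the first failed call, or None."""
    for i, step in enumerate(steps, start=1):
        if step.error:
            return i
    return None


# Made-up trace: the model searches for a file, then reads the wrong path.
trace = [
    CallStep("search_files", {"query": "report"}, result="reports/q3.csv"),
    CallStep("read_file", {"path": "report/q3.csv"}, error="file not found"),
    CallStep("summarize", {"text": ""}, result="(empty)"),
]

print(render_trace(trace))
print("first failing step:", first_failure(trace))
```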

Symphony natively supports rendering interfaces, and I'm excited to see what developers build with it.

MIT License

Symphony is an open source playground for exploring these emerging ideas. If you would like to support ongoing development, consider leaving a star!
